Introduction

Artificial Intelligence (AI) is changing the world around us. It is transforming industries and reshaping the way we live and work. AI already works effectively in many fields, such as automated customer service bots, medical diagnosis tools, and finance. But as AI technology becomes more advanced, it also brings complex challenges that society must address. One of the most pressing issues is how AI affects individual privacy.

The tension between AI’s transformative power and the need to protect personal privacy and security is undeniable. On one hand, AI offers exceptional opportunities for data-driven work, producing smarter and more efficient systems. On the other hand, the large volume of personal data that AI algorithms need poses significant risks to individual privacy.

This blog explores the relationship between AI and privacy, highlighting the delicate balance that must be maintained. We will look at how AI systems interact with personal data and examine the ethical and security concerns that arise.

Privacy concerns with AI:

When it comes to privacy concerns with AI, the scope and scale of data collection and usage is overwhelming. AI systems depend on large amounts of data. Why does AI need so much data? It needs it to learn, adapt, and deliver personalized experiences. But this high demand for data raises serious questions about what is collected and how it is used.

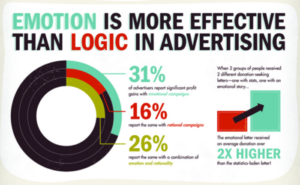

AI systems collect information from many sources, including social media platforms, search engines, wearable devices, and more. They analyze everything from our online patterns and shopping habits to our location and even our biometrics. This data is used to improve AI algorithms, create targeted advertising, and personalize user experiences. However, the huge volume of personal information collected opens the door to a large number of privacy risks.

Risk to individual privacy:

Data breaches are one of the most common privacy risks related to AI. Because AI systems depend on large data sets, they become attractive targets for hackers. A breach can expose sensitive personal information, which harms individuals and erodes trust in technology. Even without a breach, there is always the risk of misuse or unauthorized sharing of personal data. Companies may use the data in ways that were not originally intended, or sell it to third parties without proper consent.

Another important concern is surveillance. AI-powered technologies, such as facial recognition and tracking systems, can monitor an individual’s movements and activities with unsettling precision. These technologies have many legitimate uses, such as in security and law enforcement, but their potential for abuse and intrusion on privacy is high. They could create a society where individuals feel constantly watched. This invasion of privacy can have a chilling effect on personal freedom and autonomy.

Examples of AI privacy violations

Examples of AI privacy violations are not hard to find. They range from high-profile data breaches that expose millions of users’ personal information to cases where AI systems have been used for intrusive surveillance. Consider the Cambridge Analytica scandal, where personal data from Facebook was used without users’ explicit consent, leading to a major breach of trust. Or take the case of facial recognition technology being used by law enforcement without sufficient oversight, which raises concerns about racial profiling and wrongful arrests.

These examples show the need for strong privacy protections and regulatory frameworks to ensure AI technology does not interfere with individual rights. As AI continues to advance, addressing privacy concerns must be at the forefront of the conversation.

Security Measures to Protect Privacy in AI

When it comes to protecting privacy in AI, robust security measures are a must. A key strategy is encryption, which scrambles data to make it unreadable without the correct decryption key. By securing data at rest and in transit, organizations can protect sensitive information from unauthorized access or data breaches. This practice is especially important when AI systems process personal or sensitive data.

Anonymization is another critical technique. It involves removing or masking identifiable information from data sets, making it difficult to trace the data back to individuals. This allows AI systems to analyze patterns and make predictions without compromising user privacy. Anonymization is commonly used in research and analytics to ensure compliance with privacy regulations.
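To make the idea of removing or masking identifiable information more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the field names (`name`, `email`, `user_id`, `age_band`) and the helper functions are hypothetical and do not come from any particular system. Note also that replacing an ID with a keyed hash is strictly pseudonymization rather than full anonymization, since whoever holds the key can still link records to each other.

```python
import hashlib
import hmac
import secrets

# Hypothetical field names, chosen only for this illustration.
DIRECT_IDENTIFIERS = {"name", "email"}   # removed entirely
PSEUDONYMIZED_FIELDS = {"user_id"}       # replaced by a keyed hash


def pseudonymize(value: str, key: bytes) -> str:
    """Return a keyed SHA-256 hash (HMAC) that is stable for a given key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, key: bytes) -> dict:
    """Drop direct identifiers and pseudonymize linking fields."""
    masked = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            continue  # remove the identifying field
        if field in PSEUDONYMIZED_FIELDS:
            masked[field] = pseudonymize(str(value), key)
        else:
            masked[field] = value  # keep non-identifying attributes as-is
    return masked


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # keep this secret and separate from the data
    record = {
        "user_id": "u-1001",
        "name": "Ada",
        "email": "ada@example.com",
        "age_band": "30-39",
    }
    print(mask_record(record, key))
```

The same user always maps to the same pseudonym under one key, so patterns can still be analyzed across records, while names and email addresses never reach the analytics data set.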

Secure data sharing is a growing concern, as AI systems often need to share information across different platforms or organizations. To maintain privacy, companies can use secure protocols and limit access to authorized personnel only. Data-sharing agreements that outline privacy expectations and responsibilities are also important to ensure data remains secure.

Monitoring and auditing AI systems are important for ongoing privacy compliance. Regular audits can identify potential vulnerabilities and ensure that AI systems adhere to privacy policies and regulations. Continuous monitoring allows organizations to detect and respond to suspicious activity, which reduces the risk of data breaches or misuse.
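As a rough illustration of what detecting suspicious activity can look like, the sketch below scans an access log and flags accounts that read personal records unusually often. The log format, the function name `flag_unusual_access`, and the threshold are all assumptions made for this example. Real monitoring would rely on far richer signals than a simple count.

```python
from collections import Counter


def flag_unusual_access(access_log, max_reads_per_user=100):
    """Return users whose number of 'read' actions exceeds the threshold.

    access_log is assumed to be a list of dicts with 'user' and 'action' keys.
    """
    reads = Counter(
        entry["user"] for entry in access_log if entry["action"] == "read"
    )
    return sorted(user for user, count in reads.items() if count > max_reads_per_user)


if __name__ == "__main__":
    log = [
        {"user": "alice", "action": "read"},
        {"user": "alice", "action": "read"},
        {"user": "alice", "action": "read"},
        {"user": "bob", "action": "read"},
        {"user": "bob", "action": "write"},
    ]
    # With a deliberately low threshold, only alice is flagged.
    print(flag_unusual_access(log, max_reads_per_user=2))
```

A flagged account is not proof of misuse. It is a prompt for a human reviewer to check whether the access was justified.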

The Future of AI and Privacy Concerns

Looking ahead, several emerging trends could affect the relationship between AI and privacy. Privacy-enhancing technologies such as federated learning and homomorphic encryption are gaining traction. Federated learning trains a model across many devices without moving the raw data off them, and homomorphic encryption allows computation on data while it stays encrypted. Both help AI systems learn from data without directly accessing it, which provides a more privacy-friendly approach to AI development.
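The idea of learning from data without collecting it in one place can be sketched with a toy version of federated averaging. In this simplified example, each client fits a one-parameter model (y = weight * x) on its own data and sends back only the updated weight. The server never sees the raw data points. All function names, the learning rate, and the data are assumptions for illustration. Production systems add protections such as secure aggregation that are not shown here.

```python
def local_update(weight, data, lr=0.01, epochs=20):
    """Run a few gradient-descent steps for y = weight * x on one client's data."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to the weight.
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(client_weights, client_sizes):
    """Average client weights, weighted by how many samples each client holds."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


def train(clients, rounds=10):
    """Alternate local training and server-side averaging for several rounds."""
    global_weight = 0.0
    for _ in range(rounds):
        # Each client trains locally; only the resulting weight is shared.
        updates = [local_update(global_weight, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_weight = federated_average(updates, sizes)
    return global_weight


if __name__ == "__main__":
    # Two clients whose private data both follow y = 3x.
    clients = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]]
    print(train(clients))  # approaches 3.0
```

Sharing weights instead of records reduces exposure, but model updates can still leak information, which is why federated learning is usually combined with other safeguards.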

Ethical AI is another trend that is gaining popularity. It involves applying ethical principles in AI design and development to make sure technology aligns with societal values. This includes striving for fairness, transparency, and accountability in AI.

Collaboration and dialogue among stakeholders will be essential as AI technology continues to change. By collaborating across industry, government, and civil society, stakeholders can work together to address AI and privacy challenges. Open dialogue can lead to better regulations, stronger industry standards, and greater public understanding of AI’s impact on privacy.

Conclusion

AI is transforming industries and making our lives better, but it also raises serious privacy concerns. We have discussed the challenges related to AI’s extensive data collection and usage. We have emphasized the risks of data breaches, unauthorized data sharing, and surveillance. We have also explored current regulatory frameworks like GDPR and CCPA and the difficulty they face in keeping up with AI’s growth.

We then looked closely at strategies to balance technology and privacy, focusing mainly on Privacy by Design and data minimization, as well as the importance of transparency and accountability. Security measures such as encryption and anonymization are very important for privacy protection in AI. We have also seen that monitoring and auditing help ensure compliance with privacy regulations.

In the future, the relationship between AI and privacy will depend largely on emerging technologies that prioritize privacy and on ethical AI practices. Collaboration between industry and government is important to solve the complex challenges AI presents.

Balancing AI with privacy and security is not just a technical challenge; it is a social issue. We can address it by collaborating, implementing robust security measures, and adopting ethical AI practices.